I am a Postdoc at the Hire Aspirations Institute working with Cynthia Dwork to improve fairness in hiring. My work examines how machine learning systems can augment human intelligence and, conversely, how we can build more human-like AI systems. I have a broader interest in studying sociotechnical systems, with a focus on how new developments in technology impact society.

My PhD was in computer science at the University of Toronto, where I worked with Ashton Anderson in the Computational Social Science Lab. While there I created Maia Chess, a chess engine that plays like a human. The Maia Chess project has had a significant impact on how chess players view chess engines: the bots I run are the first, second, and third most popular bots on lichess.org, and other versions are available on many other platforms. I am still a part of the project, but I recommend reaching out to the email on the Maia Chess website if you have questions about it, as I am not currently looking for students.

I am currently on the job market looking for a faculty or research scientist position starting in the summer of 2025. If you are interested in working with me, please reach out. My research statement is available on request.

Notable Papers:


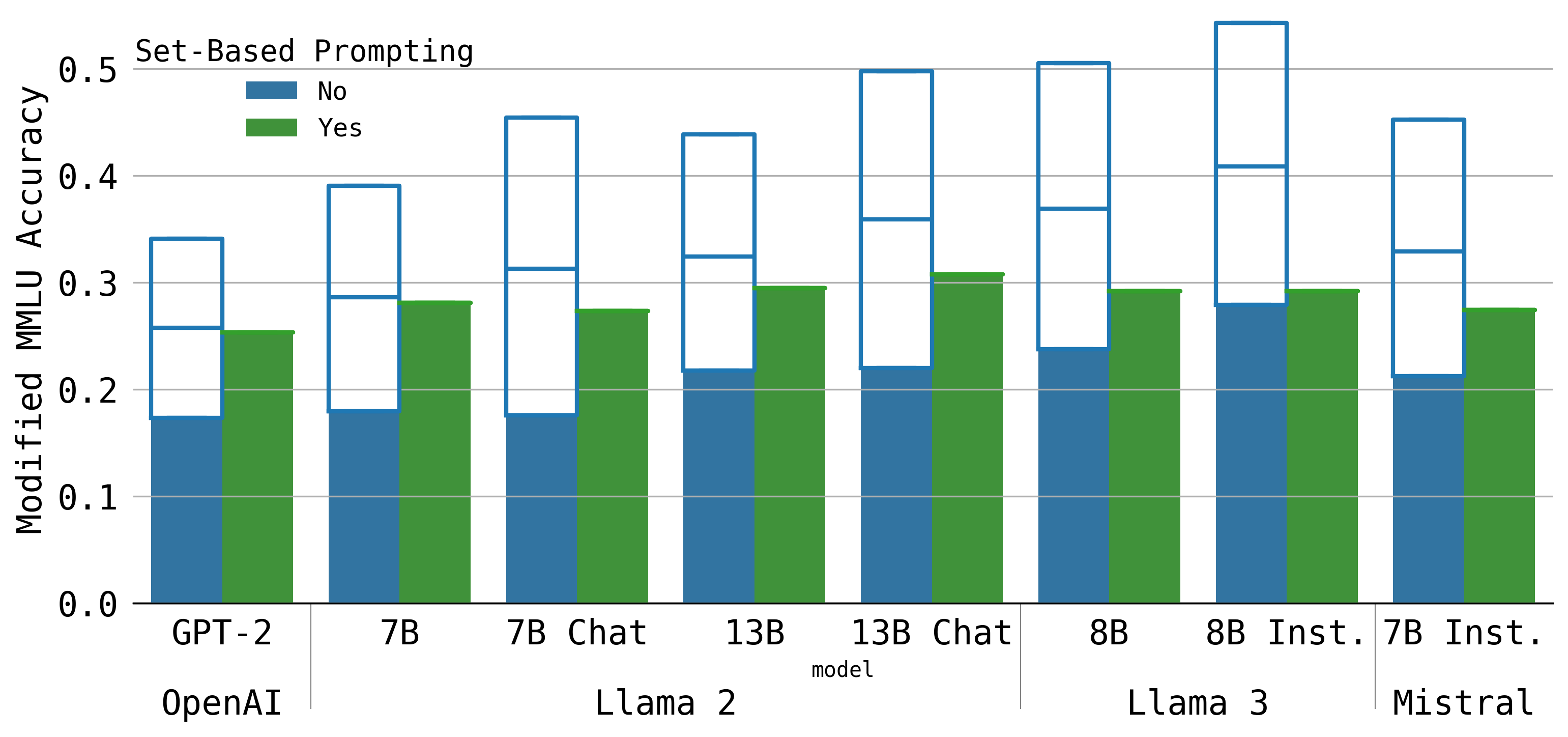

Order-Independence Without Fine Tuning

Reid McIlroy-Young, Katrina Brown, Conlan Olson, Linjun Zhang, & Cynthia Dwork

NeurIPS 2024

The development of generative language models that can create long and coherent textual outputs via autoregression has led to a proliferation of uses and a corresponding sweep of analyses as researchers work to determine the limitations of this new paradigm. Unlike humans, these 'Large Language Models' (LLMs) are highly sensitive to small changes in their inputs, leading to unwanted inconsistency in their behavior. One problematic inconsistency when LLMs are used to answer multiple-choice questions or analyze multiple inputs is order dependency: the output of an LLM can (and often does) change significantly when sub-sequences are swapped, despite both orderings being semantically identical. In this paper we present Set-Based Prompting, a technique that guarantees the output of an LLM will not have order dependence on a specified set of sub-sequences. We show that this method provably eliminates order dependency, and that it can be applied to any transformer-based LLM to enable text generation that is unaffected by re-orderings. Delving into the implications of our method, we show that, despite our inputs being out of distribution, the impact on expected accuracy is small, where the expectation is over the order of uniformly chosen shuffling of the candidate responses, and usually significantly less in practice. Thus, Set-Based Prompting can be used as a 'dropped-in' method on fully trained models. Finally, we discuss how our method's success suggests that other strong guarantees can be obtained on LLM performance via modifying the input representations.


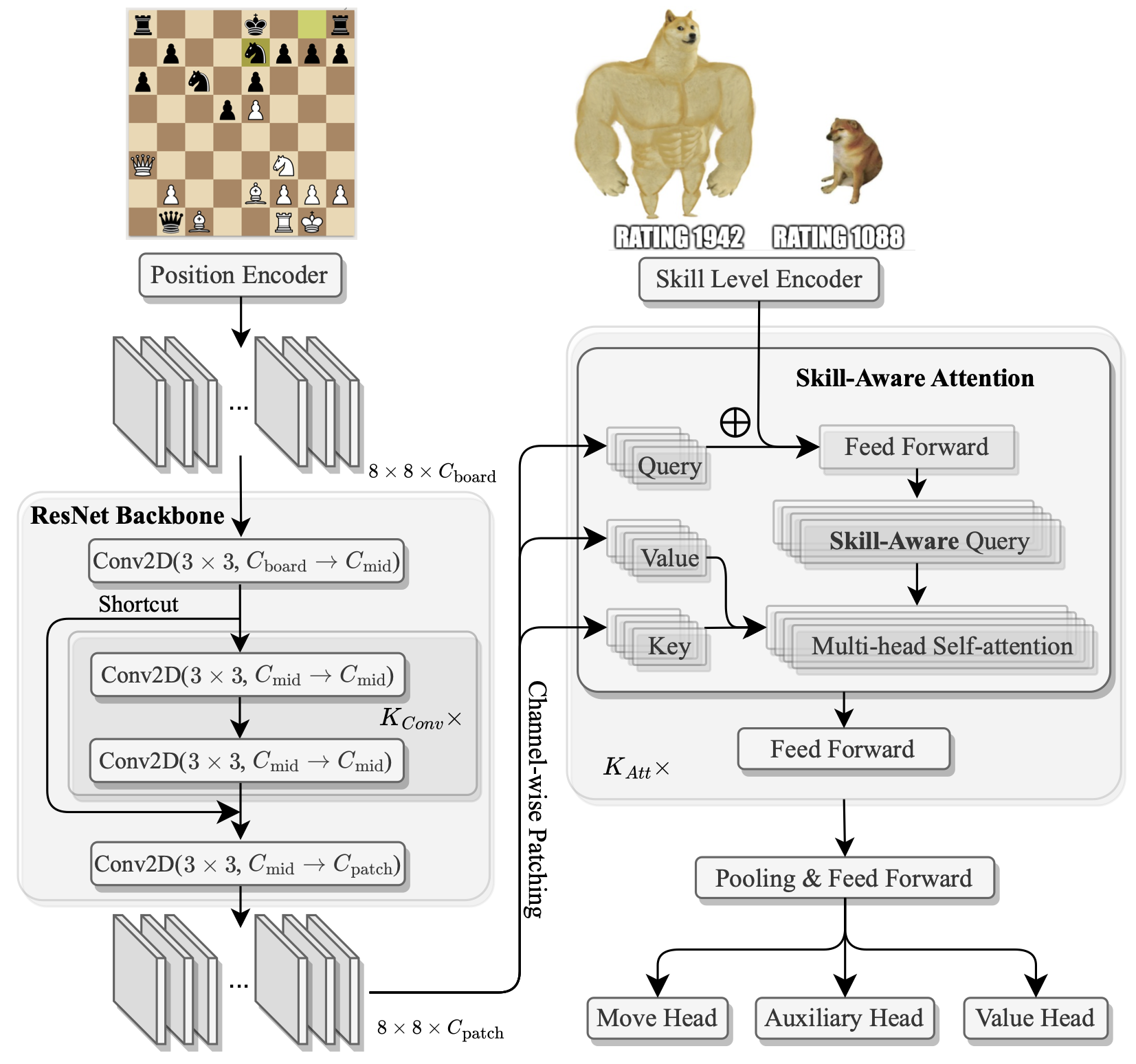

A Unified Model for Human-AI Alignment in Chess

Zhenwei Tang, Difan Jiao, Reid McIlroy-Young, Jon Kleinberg, Siddhartha Sen & Ashton Anderson

NeurIPS 2024

There are an increasing number of domains in which artificial intelligence (AI) systems both surpass human ability and accurately model human behavior. This introduces the possibility of algorithmically-informed teaching in these domains through more relatable AI partners and deeper insights into human decision-making. Critical to achieving this goal, however, is coherently modeling human behavior at various skill levels. Chess is an ideal model system for conducting research into this kind of human-AI alignment, with its rich history as a pivotal testbed for AI research, mature superhuman AI systems like AlphaZero, and precise measurements of skill via chess rating systems. Previous work in modeling human decision-making in chess uses completely independent models to capture human style at different skill levels, meaning they lack coherence in their ability to adapt to the full spectrum of human improvement and are ultimately limited in their effectiveness as AI partners and teaching tools. In this work, we propose a unified modeling approach for human-AI alignment in chess that coherently captures human style across different skill levels and directly captures how people improve. Recognizing the complex, non-linear nature of human learning, we introduce a skill-aware attention mechanism to dynamically integrate players' strengths with encoded chess positions, enabling our model to be sensitive to evolving player skill. Our experimental results demonstrate that this unified framework significantly enhances the alignment between AI and human players across a diverse range of expertise levels, paving the way for deeper insights into human decision-making and AI-guided teaching tools.


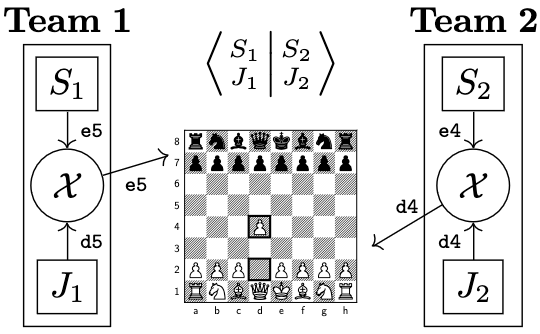

Designing Skill-Compatible AI: Methodologies and Frameworks in Chess

Karim Hamade, Reid McIlroy-Young, Siddhartha Sen, Jon Kleinberg & Ashton Anderson

ICLR 2024

Powerful artificial intelligence systems are often used in settings where they must interact with agents that are computationally much weaker, for example when they work alongside humans or operate in complex environments where some tasks are handled by algorithms, heuristics, or other entities of varying computational power. For AI agents to successfully interact in these settings, however, achieving superhuman performance alone is not sufficient; they also need to account for suboptimal actions or idiosyncratic style from their less-skilled counterparts. We propose a formal evaluation framework for assessing the compatibility of near-optimal AI with interaction partners who may have much lower levels of skill; we use popular collaborative chess variants as model systems to study and develop AI agents that can successfully interact with lower-skill entities. Traditional chess engines designed to output near-optimal moves prove to be inadequate partners when paired with engines of various lower skill levels in this domain, as they are not designed to consider the presence of other agents. We contribute three methodologies to explicitly create skill-compatible AI agents in complex decision-making settings, and two chess game frameworks designed to foster collaboration between powerful AI agents and less-skilled partners. On these frameworks, our agents outperform state-of-the-art chess AI (based on AlphaZero) despite being weaker in conventional chess, demonstrating that skill-compatibility is a tangible trait that is qualitatively and measurably distinct from raw performance. Our evaluations further explore and clarify the mechanisms by which our agents achieve skill-compatibility.


Mimetic Models: Ethical Implications of AI that Acts Like You

Reid McIlroy-Young, Siddhartha Sen, Jon Kleinberg, Solon Barocas & Ashton Anderson

AIES 2022

Press: Import AI

An emerging theme in artificial intelligence research is the creation of models to simulate the decisions and behavior of specific people, in domains including game-playing, text generation, and artistic expression. These models go beyond earlier approaches in the way they are tailored to individuals, and the way they are designed for interaction rather than simply the reproduction of fixed, pre-computed behaviors. We refer to these as mimetic models, and in this paper we develop a framework for characterizing the ethical and social issues raised by their growing availability. Our framework includes a number of distinct scenarios for the use of such models, and considers the impacts on a range of different participants, including the target being modeled, the operator who deploys the model, and the entities that interact with it.


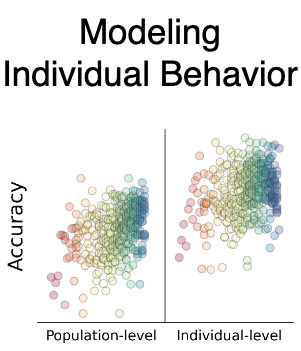

Learning Models of Individual Behavior in Chess

Reid McIlroy-Young, Russell Wang, Siddhartha Sen, Jon Kleinberg & Ashton Anderson

KDD 2022

AI systems that can capture human-like behavior are becoming increasingly useful in situations where humans may want to learn from these systems, collaborate with them, or engage with them as partners for an extended duration. In order to develop human-oriented AI systems, the problem of predicting human actions -- as opposed to predicting optimal actions -- has received considerable attention. Existing work has focused on capturing human behavior in an aggregate sense, which potentially limits the benefit any particular individual could gain from interaction with these systems. We extend this line of work by developing highly accurate predictive models of individual human behavior in chess. Chess is a rich domain for exploring human-AI interaction because it combines a unique set of properties: AI systems achieved superhuman performance many years ago, and yet humans still interact with them closely, both as opponents and as preparation tools, and there is an enormous corpus of recorded data on individual player games. Starting with Maia, an open-source version of AlphaZero trained on a population of human players, we demonstrate that we can significantly improve prediction accuracy of a particular player's moves by applying a series of fine-tuning methods. Furthermore, our personalized models can be used to perform stylometry -- predicting who made a given set of moves -- indicating that they capture human decision-making at an individual level. Our work demonstrates a way to bring AI systems into better alignment with the behavior of individual people, which could lead to large improvements in human-AI interaction.

The full list is in Publications and my CV.